# Run this cell to install the latest version of fastcore shared on github

# !pip install git+https://github.com/fastai/fastai2.git

# Run this cell to install the latest version of fastcore shared on github

# !pip install git+https://github.com/fastai/fastcore.git

# Run this cell to install the latest version of timeseries shared on github

# !pip install git+https://github.com/ai-fast-track/timeseries.git

%reload_ext autoreload

%autoreload 2

%matplotlib inline

from timeseries.all import *

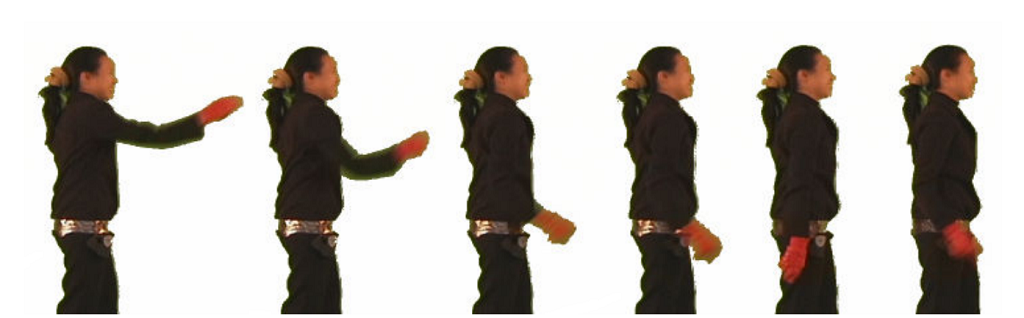

GunPoint Dataset

The dataset was obtained by capturing two actors transiting between yoga poses in front of a green screen. The problem is to discriminate between one actor (male) and another (female). Each image was converted to a one dimensional series by finding the outline and measuring the distance of the outline to the centre.

# You can choose any of univariate dataset listed the `data.py` file

dsname = 'GunPoint'

# url = 'http://www.timeseriesclassification.com/Downloads/GunPoint.zip'

path = unzip_data(URLs_TS.UNI_GUN_POINT)

path

fname_train = f'{dsname}_TRAIN.arff'

fname_test = f'{dsname}_TEST.arff'

fnames = [path/fname_train, path/fname_test]

fnames

data = TSData.from_arff(fnames)

print(data)

items = data.get_items()

# dls = TSDataLoaders.from_files(fnames=fnames, batch_tfms=batch_tfms, num_workers=0, device=default_device())

dls = TSDataLoaders.from_files(bs=64, fnames=fnames, num_workers=0, device=default_device())

dls.show_batch(max_n=9)

# Number of channels (i.e. dimensions in ARFF and TS files jargon)

c_in = get_n_channels(dls.train) # data.n_channels

# Number of classes

c_out= dls.c

c_in,c_out

model = inception_time(c_in, c_out).to(device=default_device())

# model

# opt_func = partial(Adam, lr=3e-3, wd=0.01)

#Or use Ranger

def opt_func(p, lr=slice(3e-3)): return Lookahead(RAdam(p, lr=lr, mom=0.95, wd=0.01))

#Learner

loss_func = LabelSmoothingCrossEntropy()

learn = Learner(dls, model, opt_func=opt_func, loss_func=loss_func, metrics=accuracy)

# print(learn.summary())

lr_min, lr_steep = learn.lr_find()

lr_min, lr_steep

epochs=24; lr_max=1e-3

learn.fit_one_cycle(epochs, lr_max=lr_max)

learn.recorder.plot_loss()

# learn.show_results(max_n=9)

interp = ClassificationInterpretation.from_learner(learn)

interp.plot_confusion_matrix()

interp.most_confused()

model = learn.model.eval()

model[5]

model[6]

User defined CAM method

The users can defined their own CAM method and plug it in the

show_cam()method. Check out bothcam_actsandgrad_cam_actsto see how easy you can create your own CAM function

def _listify(o):

if o is None: return []

if isinstance(o, list): return o

# if is_iter(o): return list(o)

return [o]

dls.test = dls.train.new(bs=3)

batch = dls.test.one_batch()

# type(batch), len(batch), type(batch[0]), batch

# b = itemize(batch)

# print(b)

# tss, ys = batch

# tss, ys

b = itemize(batch)

# b[0]

default_device()

idxs = [0,3]

x, y = get_batch(dls.train.dataset, idxs)

x[0].device, x[0]

list_items = get_list_items(dls.train.dataset, idxs)

# list_items

tdl = TfmdDL(list_items, bs=2, num_workers=0)

tdl.to(default_device())

batch = tdl.one_batch()

# batch[0][0].device, batch[0], batch[1]

dls.vocab

# x1 correspond to Gun

x1, y1 = dls.train.dataset[0]

x1.shape,y1

# x1

y1.data.item()

# x2 corresponds to Point

x2, y2 = dls.train.dataset[3]

x2.shape, x2, y2

i2o(y1), i2o(y2)

dls.tfms[1][1].decodes(y1)

# len((m.layers))

idxs = [0,3]

batch = get_batch(dls.train.dataset, idxs)

batch[0][0].device, batch[1], len(batch), type(batch)

test_eq(dls.train.dataset[0][1], batch[1][0])

test_eq(dls.train.dataset[3][1], batch[1][1])

# dls.train.dataset[0][1], dls.train.dataset[3][1]

test_eq(len(batch), 2)

test_eq(isinstance(batch, list), True)

test_eq(isinstance(batch, tuple), False)

Class Activation Map (CAM)

This option calculates the activations values at the selected layer.By default the activations curves are plotted in one single figure.

func_cam=cam_acts : activation function name (activation values at the chosen model layer). It is the default value

The figure title [Gun - Point] - CAM - mean should be read as follow:

Gun: class of the first curvePoint: class of the second curveCAM: activation function name (activation values at the chosen model layer)mean: type of reduction (read the explanation below: 4 types of reductions)

# Gun - CAM - mean

show_cam(batch, model, layer=5, i2o=i2o, func_cam=cam_acts) # default: func_cam=cam_acts, multi_fig=False, figsize=(6,4)

# Gun - CAM - mean

show_cam(batch, model, layer=5, i2o=i2o, multi_fig=True) # default: func_cam=cam_acts, figsize=(13,4)

#CAM - mean

show_cam(batch, model, layer=5, i2o=i2o, func_cam=grad_cam_acts)

Using RAW activation values: force_scale=False (Non-scaled values)

By default, both func_cam=grad_cam_acts (GRAD-CAM) and reduction=mean are used

In this example, we are plotting the raw activation values (by default GRAD-CAM). Notice the values on the cmap color palette.

We can supply a user-defined

func_cam. See here below an example with a custom defined functioncam_acts_1Pay attention to the

scalevalues. Instead of being between [0..1], they are between th min and the max of the activation raw values

#CAM - max.

show_cam(batch, model, layer=5, i2o=i2o, func_cam=grad_cam_acts, force_scale=False, cmap=CMAP.seismic)

Using Scaled activation values: force_scale=False (default = [0..1])

In this example, we are plotting the raw activation values (by default GRAD-CAM). Notice the values on the cmap color palette bounds: [0..1]. This scale range is the default one. We can supply the argument scale_range=(0,2) for example to provide a user-defined range

# Gun - Point - CAM - mean

show_cam(batch,model, layer=5, i2o=i2o, cmap=CMAP.seismic)

4 types of reduction

When raw activities are caluculated, we obtain a tensor of [128, 150]. 128 corresponds to the number of channels. Whereas 150 represents the data points. Since the original time series is a [1, 128] tensor (univariate time series), we need to reduce the [128, 150] tensor to [1, 150]. Therefore, we have several types of reductions.

show_cam() offers 4 types of reductions:

mean(default)medianmaxmean_max(average of mean and max values)

show_cam(batch, model, layer=5, i2o=i2o)

show_cam(batch, model, layer=5, i2o=i2o, func_cam=grad_cam_acts, reduction='max')

show_cam(batch, model, layer=5, i2o=i2o, func_cam=grad_cam_acts, reduction='median')

show_cam(batch, model, layer=5, i2o=i2o, func_cam=grad_cam_acts, reduction='mean_max')

show_cam(batch, model, layer=5, i2o=i2o, linewidth=2, scatter=True)

dls.train = dls.train.new(bs=5)

batch = dls.train.one_batch()

batch[0][0].device

show_cam(batch, model, layer=5, i2o=i2o, cmap=CMAP.viridis)

show_cam(batch, model, layer=5, i2o=i2o, cmap=CMAP.viridis, multi_fig=True, figsize=(18, 8))

# dls.train.dataset[0]

idxs = [0]

batch = get_batch(dls.train.dataset, idxs)

batch[0][0].device, batch[1], len(batch), type(batch)

show_cam(batch, model, layer=5, i2o=i2o, cmap=CMAP.rainbow)

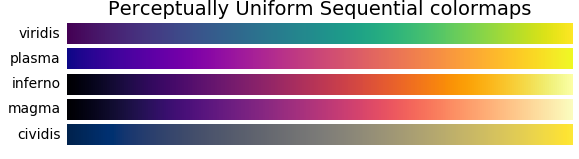

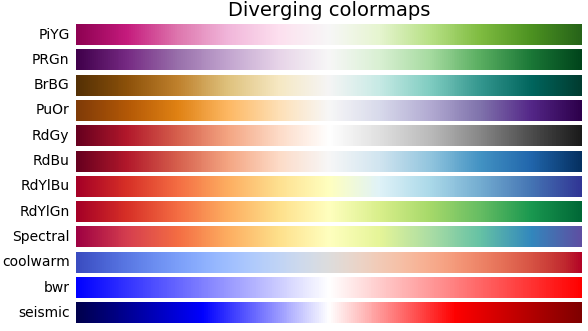

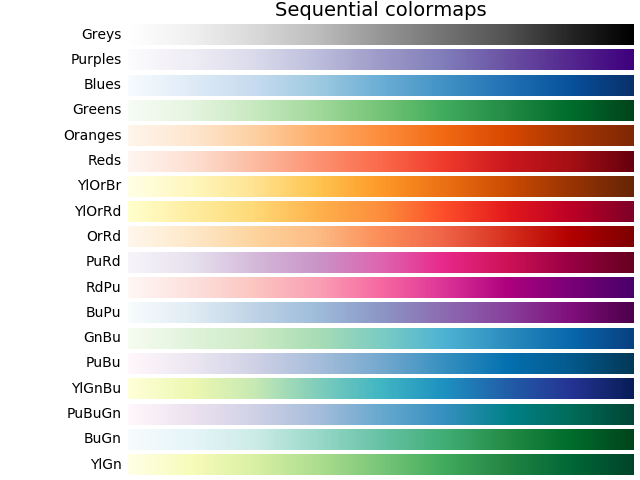

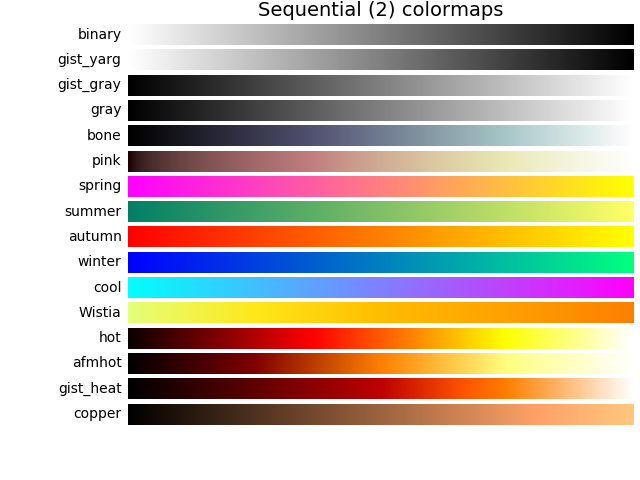

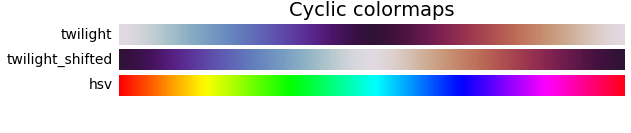

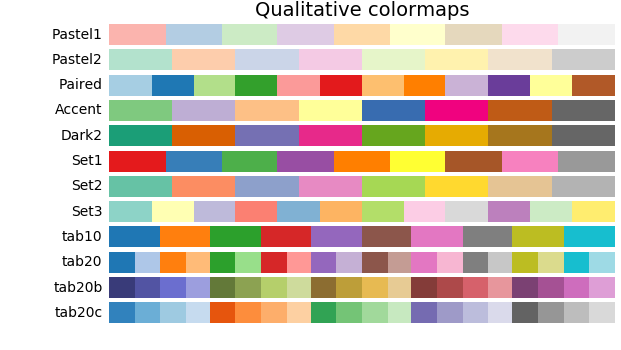

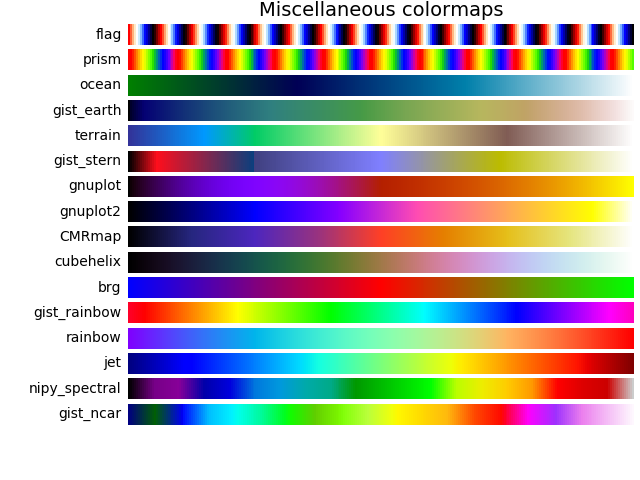

Palettes for cmap

There are

164different palettes. Check outCMAPclass and its autocompletion

idxs = [0, 3]

batch = get_batch(dls.train.dataset, idxs)

show_cam(batch, model, layer=5, i2o=i2o, cmap=CMAP.RdBu_r)

show_cam(batch, model, layer=5, i2o=i2o, cmap=CMAP.viridis)

show_cam(batch, model, layer=5, i2o=i2o, cmap=CMAP.gist_gray_r)

show_cam(batch, model, layer=5, i2o=i2o, linewidth=2, linestyles='solid')

show_cam(batch, model, layer=5, i2o=i2o, linewidth=2, linestyles='dashed')

# Gun - CAM - max

show_cam(batch, model, layer=5, i2o=i2o, linewidth=2, linestyles='dashdot')

# Gun - CAM - max

show_cam(batch, model, layer=5, i2o=i2o, linewidth=2, linestyles='dotted')

#Example using a partial

cam_acts_1 = partial(cam_acts, force_scale=False, scale_range=(0,2))

cam_acts_1.name = r'cam_acts_1'

show_cam(batch, model, layer=5, i2o=i2o, func_cam=cam_acts_1)

# Using different cmap

# **cmap possible values are: **

cmap_list = ['Accent', 'Accent_r', 'Blues', 'Blues_r', 'BrBG', 'BrBG_r', 'BuGn', 'BuGn_r', 'BuPu', 'BuPu_r', 'CMRmap', 'CMRmap_r', 'Dark2', 'Dark2_r', 'GnBu', 'GnBu_r', 'Greens', 'Greens_r', 'Greys', 'Greys_r', 'OrRd', 'OrRd_r', 'Oranges', 'Oranges_r', 'PRGn', 'PRGn_r', 'Paired', 'Paired_r', 'Pastel1', 'Pastel1_r', 'Pastel2', 'Pastel2_r', 'PiYG', 'PiYG_r', 'PuBu', 'PuBuGn', 'PuBuGn_r', 'PuBu_r', 'PuOr', 'PuOr_r', 'PuRd', 'PuRd_r', 'Purples', 'Purples_r', 'RdBu', 'RdBu_r', 'RdGy', 'RdGy_r', 'RdPu', 'RdPu_r', 'RdYlBu', 'RdYlBu_r', 'RdYlGn', 'RdYlGn_r', 'Reds', 'Reds_r', 'Set1', 'Set1_r', 'Set2', 'Set2_r', 'Set3', 'Set3_r', 'Spectral', 'Spectral_r', 'Wistia', 'Wistia_r', 'YlGn', 'YlGnBu', 'YlGnBu_r', 'YlGn_r', 'YlOrBr', 'YlOrBr_r', 'YlOrRd', 'YlOrRd_r', 'afmhot', 'afmhot_r', 'autumn', 'autumn_r', 'binary', 'binary_r', 'bone', 'bone_r', 'brg', 'brg_r', 'bwr', 'bwr_r', 'cividis', 'cividis_r', 'cool', 'cool_r', 'coolwarm', 'coolwarm_r', 'copper', 'copper_r', 'cubehelix', 'cubehelix_r', 'flag', 'flag_r', 'gist_earth', 'gist_earth_r', 'gist_gray', 'gist_gray_r', 'gist_heat', 'gist_heat_r', 'gist_ncar', 'gist_ncar_r', 'gist_rainbow', 'gist_rainbow_r', 'gist_stern', 'gist_stern_r', 'gist_yarg', 'gist_yarg_r', 'gnuplot', 'gnuplot2', 'gnuplot2_r', 'gnuplot_r', 'gray', 'gray_r', 'hot', 'hot_r', 'hsv', 'hsv_r', 'inferno', 'inferno_r', 'jet', 'jet_r', 'magma', 'magma_r', 'nipy_spectral', 'nipy_spectral_r', 'ocean', 'ocean_r', 'pink', 'pink_r', 'plasma', 'plasma_r', 'prism', 'prism_r', 'rainbow', 'rainbow_r', 'seismic', 'seismic_r', 'spring', 'spring_r', 'summer', 'summer_r', 'tab10', 'tab10_r', 'tab20', 'tab20_r', 'tab20b', 'tab20b_r', 'tab20c', 'tab20c_r', 'terrain', 'terrain_r', 'twilight', 'twilight_r', 'twilight_shifted', 'twilight_shifted_r', 'viridis', 'viridis_r', 'winter', 'winter_r']

cmaps = [f'{n} = \'{n}\'' for n in cmap_list]

# cmaps