# Run this cell to install the latest version of fastai2 from GitHub

!pip install git+https://github.com/fastai/fastai2.git

# Run this cell to install the latest version of fastcore from GitHub

!pip install git+https://github.com/fastai/fastcore.git

# Run this cell to install the latest version of timeseries from GitHub

!pip install git+https://github.com/ai-fast-track/timeseries.git

%reload_ext autoreload

%autoreload 2

%matplotlib inline

from fastai2.basics import *

from timeseries.all import *

Class Activation Map (CAM) and Gradient-CAM (GRAD-CAM) Tutorial

Both CAM and GRAD-CAM produce 'visual explanations' of how a Convolutional Neural Network (CNN) model arrives at its classification, and therefore help interpret the obtained results. The InceptionTime model is used as an illustration in this notebook.

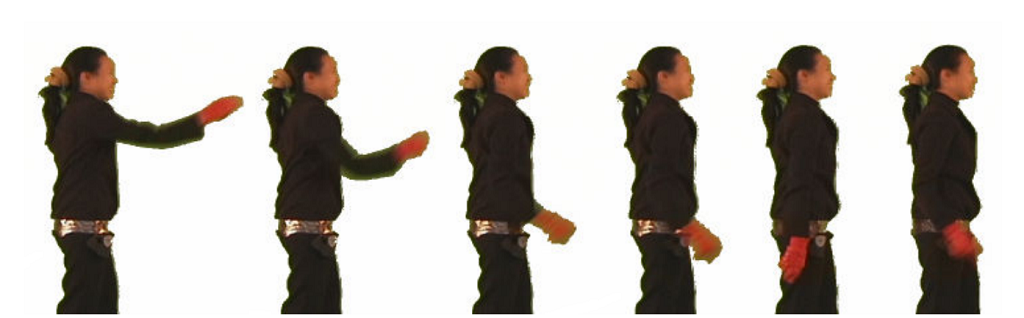
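To make the difference between the two methods concrete, here is a minimal pure-Python sketch of the standard CAM and Grad-CAM formulations applied to 1D feature maps. It is an illustration under stated assumptions only: `acts` is taken to be the [channels][time] output of the chosen layer for one sample, and `cam_1d` and `grad_cam_1d` are hypothetical helpers, not the timeseries library's `cam_acts` or `grad_cam_acts`, whose internals may differ.

```python
# Sketch of the standard CAM and Grad-CAM formulations for a 1D CNN.
# Assumption: `acts` holds the feature maps of the chosen layer for ONE sample,
# shaped [channels][time]. Hypothetical helpers, not the timeseries library API.

def cam_1d(acts, class_weights):
    """Classic CAM: weight each channel by the final linear layer's weight
    for the target class, then sum over channels at every time step."""
    n_time = len(acts[0])
    return [sum(w * ch[t] for w, ch in zip(class_weights, acts))
            for t in range(n_time)]


def grad_cam_1d(acts, grads):
    """Grad-CAM: weight each channel by the time-averaged gradient of the
    class score w.r.t. that channel, sum over channels, then apply ReLU."""
    n_time = len(acts[0])
    alphas = [sum(g) / len(g) for g in grads]  # one weight per channel
    return [max(0.0, sum(a * ch[t] for a, ch in zip(alphas, acts)))
            for t in range(n_time)]


# Toy example: 2 channels x 3 time steps
acts = [[1.0, 2.0, 3.0],
        [0.5, 0.0, -1.0]]
grads = [[0.5, 0.5, 0.5],      # channel 0 pushes the class score up
         [-1.0, -1.0, -1.0]]   # channel 1 pushes it down
print(cam_1d(acts, [1.0, 2.0]))  # -> [2.0, 2.0, 1.0]
print(grad_cam_1d(acts, grads))  # -> [0.0, 1.0, 2.5]
```

Classic CAM relies on the weights of a global-average-pooling + linear head, whereas Grad-CAM only needs gradients, which is why it can be applied to an arbitrary layer.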

GunPoint Dataset

This dataset involves one female actor and one male actor making a motion with their hand. The two classes are Gun-Draw and Point.

Gun-Draw: the actors have their hands by their sides. They draw a replica gun from a hip-mounted holster, point it at a target for approximately one second, then return the gun to the holster and their hands to their sides.

Point: the actors have their gun by their sides. They point with their index fingers to a target for approximately one second, and then return their hands to their sides.

For both classes, we tracked the centroid of the actor's right hand in both the X and Y axes, which appear to be highly correlated. The data in the archive contains only the X-axis.

# You can choose any of the univariate datasets listed in the `data.py` file

path = unzip_data(URLs_TS.UNI_GUN_POINT)

dsname = 'GunPoint'

fname_train = f'{dsname}_TRAIN.arff'

fname_test = f'{dsname}_TEST.arff'

fnames = [path/fname_train, path/fname_test]

fnames

# num_workers=0 is needed on the Windows platform

dls = TSDataLoaders.from_files(bs=64,fnames=fnames, num_workers=0)

dls.show_batch(max_n=9)

learn = ts_learner(dls)

learn.fit_one_cycle(25, lr_max=1e-3)

learn.show_results()

model = learn.model.eval()

model[5]

# i2o() function

# Converting CategoryTensor label into the human-readable label

lbl_dict = {0: 'Gun', 1: 'Point'}

def i2o(y):
    # y is a CategoryTensor holding the class index; .item() returns it as a Python int
    return lbl_dict[y.data.item()]
    # Alternative: return lbl_dict[int(dls.tfms[1][1].decodes(y))]

idxs = [0,3]

batch = get_batch(dls.train.dataset, idxs)

# len(batch), type(batch)

Class Activation Map (CAM)

This option calculates the activation values at the selected layer. By default, the activation curves are plotted in a single figure.

func_cam=cam_acts: activation function name (activation values at the chosen model layer). This is the default value.

The figure title [Gun - Point] - CAM - mean should be read as follows:

Gun: class of the first curve
Point: class of the second curve
CAM: activation function name (activation values at the chosen model layer)
mean: type of reduction (see the explanation of the 4 types of reduction below)

show_cam(batch, model, layer=5, i2o=i2o, func_cam=cam_acts) # default: func_cam=cam_acts, multi_fig=False, figsize=(6,4)

show_cam(batch, model, layer=5, i2o=i2o, multi_fig=True) # default: func_cam=cam_acts, figsize=(13,4)

show_cam(batch, model, layer=5, i2o=i2o, func_cam=cam_acts, reduction='max', cmap=CMAP.seismic)

Using RAW activation values: force_scale=False (Non-scaled values)

In this example, func_cam=grad_cam_acts (GRAD-CAM) is used together with the default reduction='mean', and we plot the raw activation values. Notice the values on the cmap color palette.

We can also supply a user-defined func_cam, such as a custom-defined function cam_acts_1.

Pay attention to the scale values: instead of being between [0..1], they range between the min and the max of the raw activation values.

show_cam(batch, model, layer=5, i2o=i2o, func_cam=grad_cam_acts, force_scale=False, cmap='seismic')
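The difference between scaled and raw values can be pictured as a min-max rescaling step. The sketch below assumes that scaling maps the raw activations linearly onto [0, 1]; `scale_to_range` is a hypothetical helper for illustration, not part of the timeseries API.

```python
def scale_to_range(values, lo=0.0, hi=1.0):
    """Linearly min-max scale `values` into [lo, hi] (hypothetical helper)."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:  # flat curve: avoid dividing by zero
        return [lo for _ in values]
    span = vmax - vmin
    return [lo + (v - vmin) / span * (hi - lo) for v in values]


raw = [-2.0, 0.0, 6.0]      # raw activations, as shown with force_scale=False
print(scale_to_range(raw))  # -> [0.0, 0.25, 1.0], as shown on a [0..1] scale
```

Scaling makes curves from different samples or layers comparable on one color palette, at the cost of hiding the absolute magnitude of the activations.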

4 types of reduction

When the raw activations are calculated, we obtain a [128, 150] tensor: 128 is the number of channels, and 150 is the number of data points. Since the original time series is a [1, 150] tensor (univariate time series), we need to reduce the [128, 150] tensor to [1, 150]. Hence the several types of reduction.

show_cam() offers 4 types of reductions:

mean (default)
median
max
mean_max (average of the mean and max values)
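A minimal sketch of the four reductions, assuming the activations are stored as a [channels][time] nested list (128 x 150 in the text above; a 3 x 2 toy array here). `reduce_channels` is a hypothetical helper that mirrors the reduction names accepted by show_cam(), not its actual implementation.

```python
from statistics import mean, median


def reduce_channels(acts, reduction="mean"):
    """Collapse a [channels][time] activation map into one value per time step."""
    columns = list(zip(*acts))  # one tuple of channel values per time step
    if reduction == "mean":
        return [mean(c) for c in columns]
    if reduction == "median":
        return [median(c) for c in columns]
    if reduction == "max":
        return [max(c) for c in columns]
    if reduction == "mean_max":  # average of the mean and max values
        return [(mean(c) + max(c)) / 2 for c in columns]
    raise ValueError(f"unknown reduction: {reduction!r}")


acts = [[1.0, 4.0],
        [2.0, 0.0],
        [6.0, 2.0]]  # 3 channels x 2 time steps
for r in ("mean", "median", "max", "mean_max"):
    print(r, reduce_channels(acts, r))
```

max highlights time steps where any single channel fires strongly, median is robust to a few outlier channels, and mean_max sits between mean and max.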

show_cam(batch, model, layer=5, i2o=i2o, func_cam=grad_cam_acts, reduction='mean_max', cmap=CMAP.seismic)

show_cam(batch, model, layer=5, i2o=i2o, linewidth=2, scatter=True, cmap=CMAP.hot_r)

dls.train = dls.train.new(bs=5)

batch = dls.train.one_batch()

show_cam(batch, model, layer=5, i2o=i2o, cmap=CMAP.viridis)

show_cam(batch, model, layer=5, i2o=i2o, cmap='viridis', multi_fig=True, figsize=(18, 8), linestyles='dotted')

idxs = [0]

batch = get_batch(dls.train.dataset, idxs)

show_cam(batch, model, layer=5, i2o=i2o, cmap='rainbow')

Palette (cmap), Line width, and Line Styles

There are 164 different palettes. Check out the CMAP class and its autocompletion.

Line styles: 'solid' | 'dashed' | 'dashdot' | 'dotted'

# reload the batch with 2 items used earlier

idxs = [0, 3]

batch = get_batch(dls.train.dataset, idxs)

show_cam(batch, model, layer=5, i2o=i2o, cmap='RdBu_r', linewidth=2, linestyles='dotted')

show_cam(batch, model, layer=5, i2o=i2o, cmap='gist_gray_r')